- Ofcom finalises child safety measures for sites and apps to introduce from July

- Tech firms must act to prevent children from seeing harmful content

- Changes will mean safer social feeds, strong age checks and more help and control for children online

Children in the UK will have safer online lives under transformational new protections finalised by Ofcom today.

We are laying down more than 40 practical measures for tech firms to meet their duties under the Online Safety Act. These will apply to sites and apps used by UK children in areas such as social media, search and gaming. This follows consultation and research involving tens of thousands of children, parents, companies and experts.

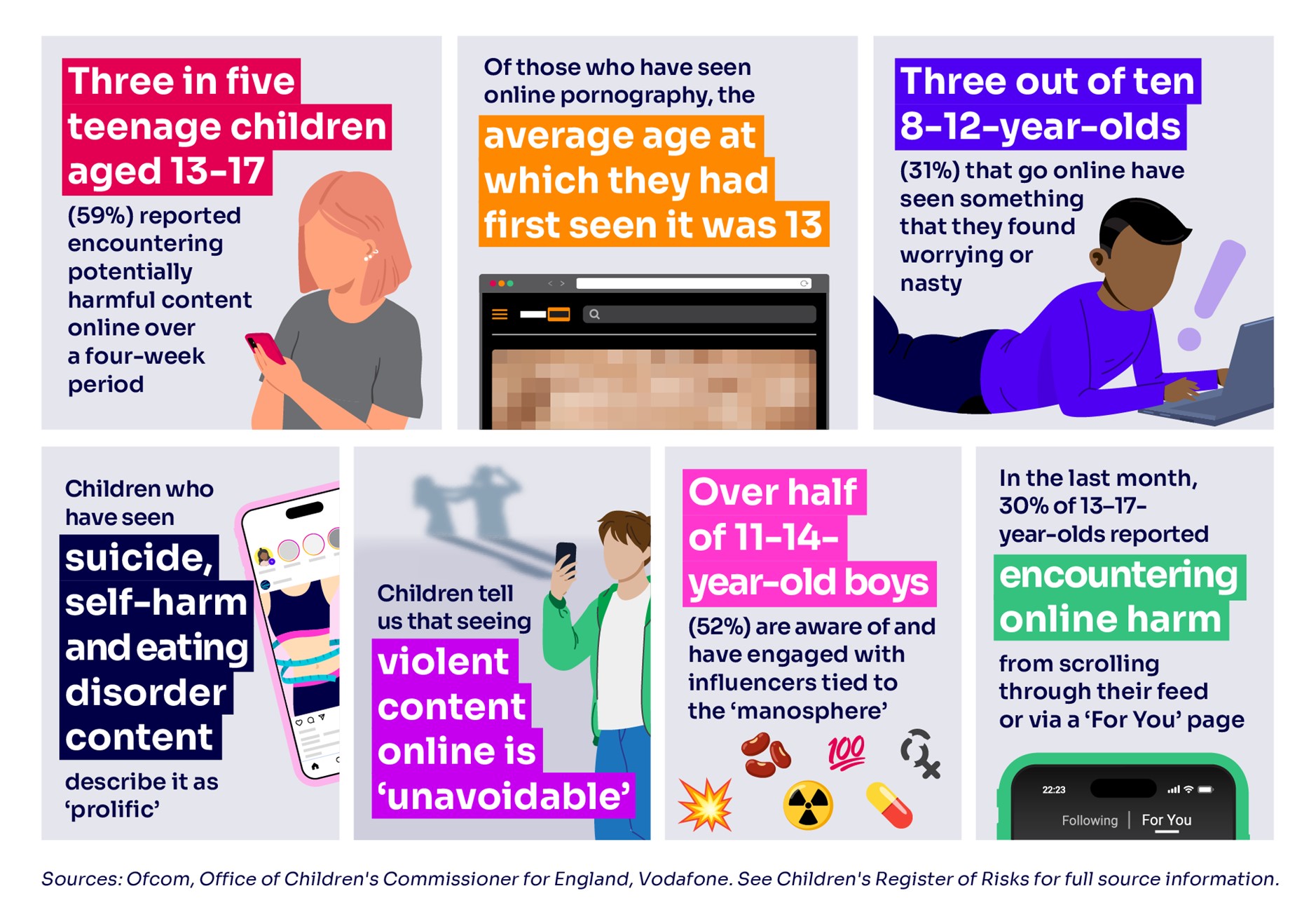

The steps include preventing minors from encountering the most harmful content relating to suicide, self-harm, eating disorders and pornography. Online services must also act to protect children from misogynistic, violent, hateful or abusive material, online bullying and dangerous challenges.

Dame Melanie Dawes, Ofcom Chief Executive, said: “These changes are a reset for children online. They will mean safer social media feeds with less harmful and dangerous content, protections from being contacted by strangers and effective age checks on adult content. Ofcom has been tasked with bringing about a safer generation of children online, and if companies fail to act they will face enforcement.”

Gen Safer: what we expect

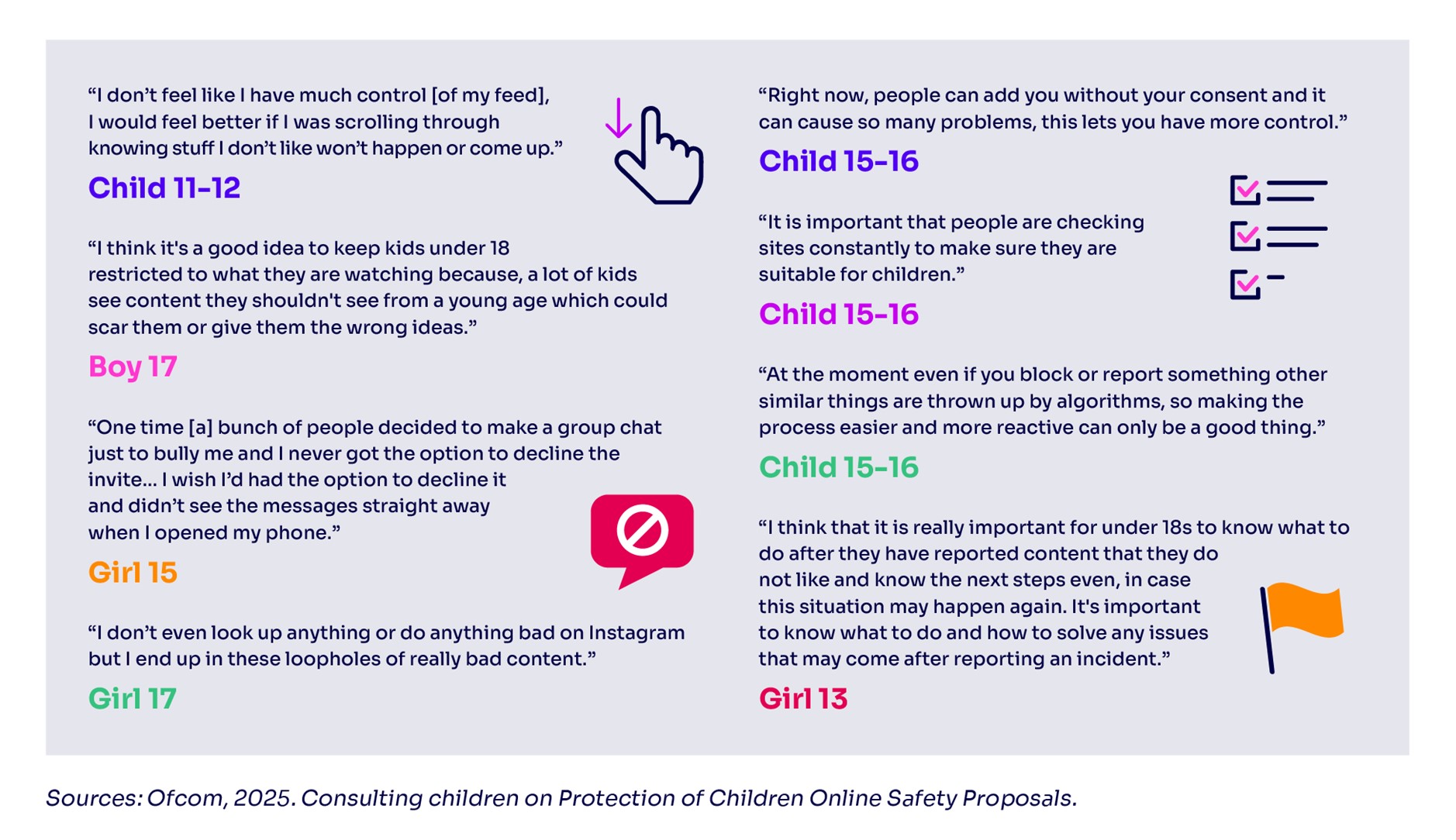

In designing the Codes of Practice finalised today, our researchers heard from over 27,000 children and 13,000 parents, alongside consultation feedback from industry, civil society, charities and child safety experts. We also conducted a series of consultation workshops and interviews with children from across the UK to hear their views on our proposals in a safe environment. [1]

Taking these views into account, our Codes demand a ‘safety-first’ approach in how tech firms design and operate their services in the UK. The measures include:

- Safer feeds. Personalised recommendations are children’s main pathway to encountering harmful content online. Any provider that operates a recommender system and poses a medium or high risk of harmful content must configure its algorithms to filter out harmful content from children’s feeds.

- Effective age checks. The riskiest services must use highly effective age assurance to identify which users are children. This allows services to protect children from harmful material, while preserving adults’ rights to access legal content. That may involve preventing children from accessing the entire site or app, or only some parts or kinds of content. If services have minimum age requirements but are not using strong age checks, they must assume younger children are on their service and ensure they have an age-appropriate experience.

- Fast action. All sites and apps must have processes in place to review, assess and quickly tackle harmful content when they become aware of it.

- More choice and support for children. Sites and apps are required to give children more control over their online experience. This includes allowing them to indicate what content they don’t like, to accept or decline group chat invitations, to block and mute accounts and to disable comments on their own posts. There must be supportive information for children who may have encountered, or have searched for, harmful content.

- Easier reporting and complaints. Children will find it straightforward to report content or complain, and providers should respond with appropriate action. Terms of service must be clear so children can understand them.

- Strong governance. All services must have a named person accountable for children’s safety, and a senior body should annually review the management of risk to children.

These measures build on the rules we’ve already put in place to protect users, including children, from illegal online harms – including grooming. They also complement specific requirements for porn sites to prevent children from encountering online pornography.

What happens next

Providers of services likely to be accessed by UK children now have until 24 July to finalise and record their assessment of the risk their service poses to children, which Ofcom may request. From 25 July 2025, they should then apply the safety measures set out in our Codes to mitigate those risks. [2] [3]

If companies fail to comply with their new duties, Ofcom has the power to impose fines and – in very serious cases – apply for a court order to prevent the site or app from being available in the UK.

Today’s Codes of Practice are the basis for a new era of child safety regulation online. We will build on them with further consultations, in the coming months, on additional measures to protect users from illegal material and harms to children. [5]

ENDS

Notes to editors

- We shared age-appropriate proposals with a group of 112 children, who discussed and debated their views in a deliberative engagement programme.

- In March, we launched an enforcement programme into child sexual abuse imagery on file-sharing services. Earlier this month, we launched an investigation into whether the provider of an online suicide forum has failed to comply with its duties under the UK’s Online Safety Act.

- Today, the UK Government has laid our Codes before Parliament for approval, per the Act’s requirements. Subject to our Codes completing the Parliamentary process as expected, from 25 July providers will have to apply the safety measures set out in our Codes (or take alternative action to meet their duties) to mitigate the risks that their services pose to children.

- We have also published information and advice that explains to parents what our measures mean in practice, tips on keeping children safe online and where they can get further support – visit ofcom.org.uk/parents. This includes a video explaining the changes to children. Alongside this, we have released new video content featuring real questions, from real parents, that they want to ask social media companies about the harmful content children come across online.

- We will shortly publish a consultation on additional measures that we expect services to take to further strengthen protections, including to:

  - ban the accounts of people found to have shared child sexual abuse material (CSAM);

  - introduce crisis response protocols for emergency events;

  - use hash matching to prevent the sharing of non-consensual intimate imagery and terrorist content;

  - tackle illegal harms, including CSAM, through the use of AI; and

  - use highly effective age assurance to protect children from grooming.

  We also recognise that livestreaming can create particular risks for children. Our upcoming consultation will set out the evidence surrounding livestreaming, and make proposals to reduce these risks.